The field of AI-driven video generation is progressing rapidly, and Alibaba’s Wan 2.1 (also known as Wanx 2.1) is a new arrival. While in-depth, confirmed information can be difficult to find, this article compiles the available details about Wan 2.1, examining what it is, what it can do, and what it means for professional video editors. We’ll look closely at its core features, how it might stack up against competing AI video tools, and its practical uses. We will also cover limitations, prompt development, and the broader technological context.

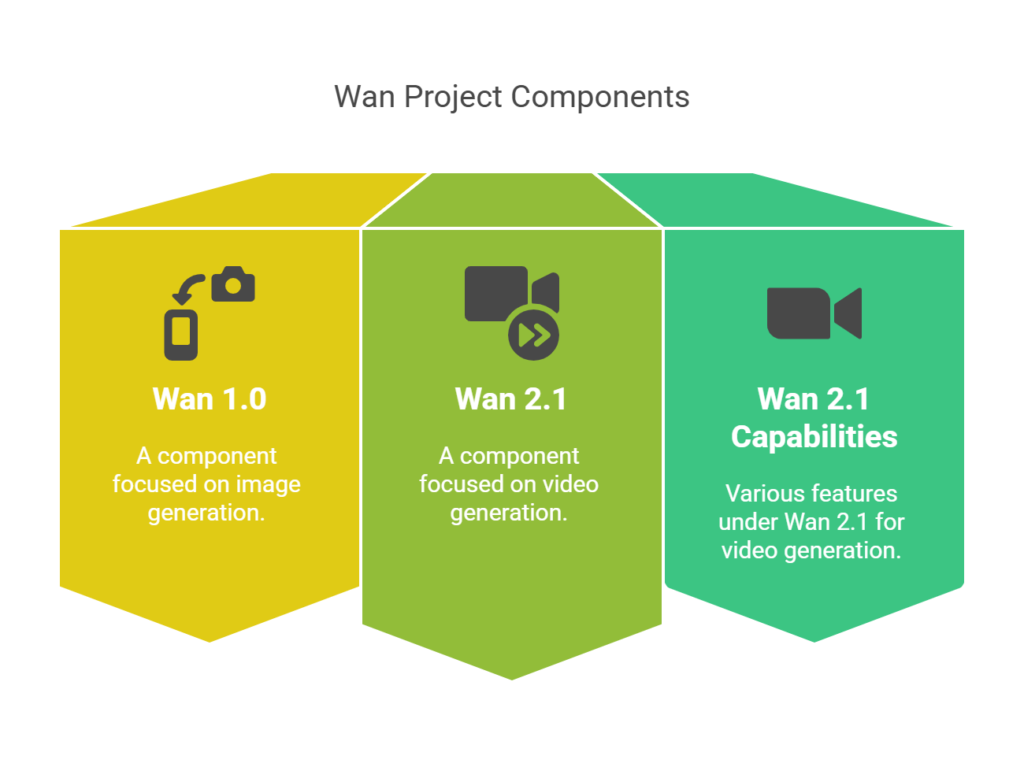

Understanding Alibaba’s Wan Project

The Wan project, from Alibaba’s DAMO Academy, signals a commitment to AI-driven content creation. It includes a number of models, with Wan 2.1 targeting video. The name, translating to “Myriad Images” or “All Things,” reflects its ambitious scope.

Previous iterations of Wan, namely version 1.0, focused mainly on image generation. Those earlier models were good at generating images from text prompts and showed a grasp of visual concepts and art styles. Wan 2.1 builds on this foundation and extends it to video.

Specifics about the inner workings of Wan 2.1 aren’t public. It is likely a diffusion-based model, which is the common approach. Diffusion models are trained by gradually adding noise to images or video frames and learning to reverse that process. To generate new content, they start from noise and remove it step by step, guided by a prompt.
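To make the noise-then-denoise idea concrete, here is a minimal Python sketch of the standard diffusion formulation. It is not Wan 2.1’s implementation, whose internals are not public. The linear noise schedule, the function names (`alpha_bar`, `add_noise`, `remove_noise`), and the three-value stand-in for a frame are all illustrative assumptions. A real model would use a trained neural network to predict the noise; this sketch simply reuses the true noise to show that the reverse step undoes the forward step.

```python
import math
import random


def alpha_bar(t, steps):
    """Fraction of the original signal left after t of `steps` noising steps.

    Assumes a toy linear schedule for the per-step noise amounts (betas).
    """
    betas = [1e-4 + (0.02 - 1e-4) * i / (steps - 1) for i in range(steps)]
    prod = 1.0
    for beta in betas[:t]:
        prod *= 1.0 - beta
    return prod


def add_noise(x0, t, steps, rng):
    """Forward process: blend the clean signal with Gaussian noise."""
    a = alpha_bar(t, steps)
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps


def remove_noise(xt, eps_pred, t, steps):
    """Reverse estimate: recover the clean signal from a noise prediction."""
    a = alpha_bar(t, steps)
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a)
            for x, e in zip(xt, eps_pred)]


rng = random.Random(0)
pixels = [0.1, 0.5, 0.9]  # hypothetical stand-in for one tiny frame
noisy, eps = add_noise(pixels, t=500, steps=1000, rng=rng)

# Assumption: we pass the true noise where a trained network's
# prediction would normally go.
restored = remove_noise(noisy, eps, t=500, steps=1000)
```

With a perfect noise prediction, `restored` matches `pixels` up to floating-point error. In practice the prediction is imperfect, which is why generation runs over many small denoising steps instead of one large one.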

A key selling point of the Wan project could be Wan 2.1’s support for both English and Chinese, which could make it usable by a larger audience.

The broader aim of the Wan project seems to be a complete suite of tools. Wan 2.1 is a key component, offering ways to generate video and, possibly, streamline the video production process.

Features and Potential of Wan 2.1

While specific details on Wan 2.1’s features are limited, we can infer some likely capabilities from the Wan project and from trends in AI video generation.

- Text-to-Video Generation: This is the main function. Users can enter text prompts that describe a scene or action, and Wan 2.1 will try to make a video. Output quality, clip length, and visual coherence are key factors that remain unknown.

- Image-to-Video Generation: Wan 2.1 will probably support the creation of videos from stills. This could mean animating an image, extending it, or creating variations of it.

- Style Control: Wan 2.1 is expected to give users some control over the visual style. This could include broad looks (e.g., “painting,” “photo,” “anime”) or references to specific art styles.

- Dual Language Support (Likely): Because the Wan project focuses on both Chinese and English, Wan 2.1 is expected to allow prompts in both languages.

- Video Editing (Possible): Some suggest video editing features within Wan 2.1. This could be in-painting (editing part of a video), out-painting (extending the edges of a video), or style transfer (changing a video’s look).

The full range of capabilities will become clearer as more information about Wan 2.1 emerges and access to it widens.

How Wan 2.1 Compares to Other AI Video Tools

AI video generation is increasingly competitive. Comparing Wan 2.1 with existing tools helps to determine its place.

- RunwayML (Gen-1, Gen-2): RunwayML has AI-powered video editing and generation tools. Its Gen-1 and Gen-2 models are available and offer features such as video-to-video editing and text-to-video. Wan 2.1 may compete, potentially with advantages in specific areas such as dual-language support.

- Pika Labs: Pika Labs provides a platform for AI video. It focuses on being user-friendly and accessible. Wan 2.1, like other Alibaba projects, might emphasize better technical features and output control.

- Sora (OpenAI): Sora received a lot of attention for making very realistic and detailed videos. While its accessibility is limited, it sets a high bar. Wan 2.1 will need to meet a similar visual standard.

- Google’s Veo: Veo focuses on filmmaking techniques and on making longer videos. Wan 2.1 will need to compete by doing something different.

Wan 2.1’s selling points could be:

- Dual-Language Support: This is important in a worldwide market.

- Focus on Chinese Aesthetics: The Wan project may draw on knowledge of Chinese art, culture, and visuals to produce distinctive output.

- Connection with Alibaba’s Systems: Wan 2.1 might connect with other Alibaba services.

The real measure of success will be the quality of its output, how easy it is to use, and whether it does something other tools don’t.

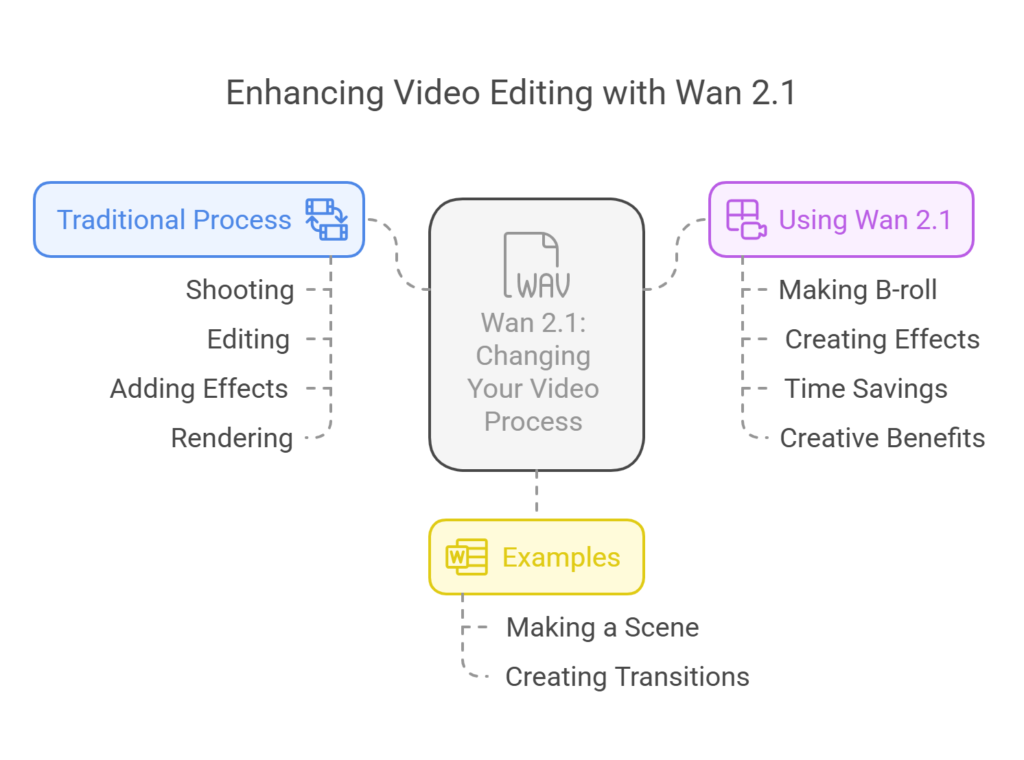

Practical Applications for Video Editors

If Wan 2.1 delivers on its expected capabilities, it could offer a number of uses:

- B-Roll Creation: Quickly get extra footage. If you need a shot of something specific, Wan 2.1 could create it, saving money and time.

- Concept Planning: Testing video ideas before production. Editors could use Wan 2.1 to plan out scenes, angles, and styles.

- Making Effects: Creating surreal or unusual imagery that would be difficult to film. This opens up options for small-budget projects.

- Filling Video Gaps: Getting short clips to cover missing parts of a video or to make transitions.

- Generating Variations: Creating many versions of a scene with altered lighting, styles, or composition, before making a final decision.

- Social Media Content Creation: Quickly getting a range of clips for social media.

These are only a few of the potential applications. Which of them prove most useful will depend on how good Wan 2.1 turns out to be.

Limitations and Ethical Considerations

Like any AI video tool, Wan 2.1 will have limitations and raise ethical concerns.

- Quality and Consistency: Making good, coherent video is difficult. Early AI video models had problems maintaining consistency from frame to frame. Wan 2.1’s quality is unknown.

- Processing Time: Generating video is computationally expensive. Wan 2.1’s speed will matter for ease of use.

- Bias: AI models are trained on data, and this data may be biased. That bias can surface in generated content as undesirable or inaccurate results.

- Copyright: Using AI-generated content raises questions about copyright and ownership. Editors will need to make sure their use of Wan 2.1 is legally sound.

- Potential for Misuse: The tech could be used to create deepfakes or other harmful content. Responsible use is a must.

- Accessibility: It is not known if, or when, Wan 2.1 will be available for testing or purchase.

Addressing these concerns is important for Wan 2.1’s development.

Prompt Engineering Strategies for Wan 2.1

Until we know more, some general prompting principles apply.

- Be Specific: Vague prompts make vague output. Give detailed scene, object, action, and style descriptions. For example, “a city street at night, with neon signs reflecting in puddles, light rain, a person walking with an umbrella.”

- Use Keywords: Use keywords that describe video elements, such as camera angles, lighting, colors, moods, and actions.

- Call out Style: If you want a specific look, say so. This could include referencing artists, movie genres, or art types.

- Experiment: Do not expect to get it right immediately. Try different prompts with small changes to discover what works best.

- Use Both Languages (If Available): If Wan 2.1 works in English and Chinese, try prompts in both to find differences.

Good prompt writing improves with practice. The better you understand how the model interprets language, the more effectively you can direct it.
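The tips above can be turned into a small helper for building and varying prompts systematically. The following Python sketch is only an illustration: `PromptSpec` and `variations` are hypothetical names and are not part of any Wan 2.1 API, and the comma-separated prompt format is an assumption, not a documented requirement of the model.

```python
from dataclasses import dataclass, field
from itertools import product


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a specific prompt."""
    scene: str
    details: list = field(default_factory=list)
    style: str = ""
    camera: str = ""

    def render(self):
        # Join scene, details, camera keyword, and style into one prompt.
        parts = [self.scene, *self.details]
        if self.camera:
            parts.append(self.camera)
        if self.style:
            parts.append(f"in the style of {self.style}")
        return ", ".join(parts)


def variations(base, styles, cameras):
    """Render one prompt per style/camera combination for experimenting."""
    return [
        PromptSpec(base.scene, base.details, style, camera).render()
        for style, camera in product(styles, cameras)
    ]


base = PromptSpec(
    scene="a city street at night",
    details=["neon signs reflecting in puddles", "light rain"],
)
prompts = variations(
    base,
    styles=["film noir", "anime"],
    cameras=["low-angle shot", "wide shot"],
)
for prompt in prompts:
    print(prompt)
```

Changing only the style and camera fields keeps the rest of the prompt fixed, which makes it easier to see which wording change caused a difference in the output.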

Quick Takeaways

- Wan 2.1 is Alibaba’s AI video model, from the larger Wan project.

- It follows the image generation model Wan 1.0.

- Expected features are text-to-video, image-to-video, and style control, with language support for English and Chinese.

- Applications for video editors could be b-roll, concepting, and effect creation.

- The model is in development, so full access and details remain unclear.

- Any use of AI models has to take ethics into account.

- Writing a good prompt is key to making content with these models.

Conclusion

Wan 2.1 is an advancement in AI video generation. Details remain to be seen, but Alibaba’s track record makes it worth video editors’ attention. Chinese and English support may give it an edge. It is important to assess Wan 2.1’s practical features, problems, and ethics. Video editors should keep up with Wan 2.1 to stay competitive as the field changes.

Frequently Asked Questions

- When may I try Wan 2.1? You can use it now, here.

- Is Wan 2.1 free? There is currently no pricing information. Wan 2.1 may have a free trial, subscription, or other pricing.

- Can I use Wan 2.1 for editing my videos? Some articles suggest editing features, like in-painting or style transfer, but nothing is official.

- How does Wan 2.1 stack up against OpenAI’s Sora? Comparisons are difficult, since we can’t test both. Sora is very good, so it sets expectations for Wan 2.1.